Creating a Covid-19 Dashboard with bokeh, pandas, numpy, etc.

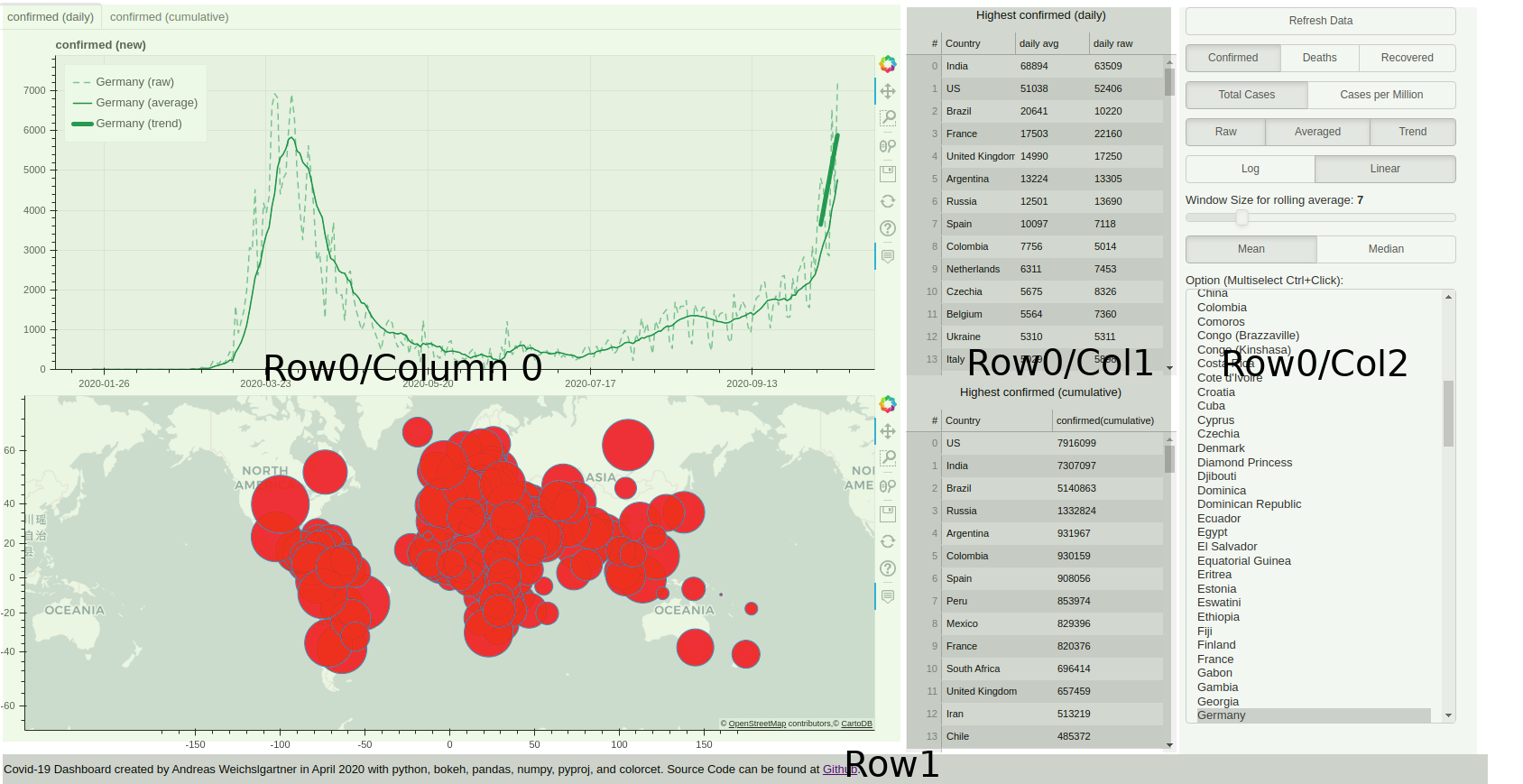

There is a plethora of Covid-19 dashboards in the depths of the internet. However, they often let you not play around with the data and the parameters. So why not just build out own and customize it to our preferences. For interactive plots and visualization I love to work with bokeh. Without having to write javascript you can create everything you need for a dashboard and creates javascript output which can be rendered nicely in your browser. The final product looks like this and the entire source code can be found at Github:

An interactive version is hosted at Heroku. Before we dive into the bokeh stuff, we take first a look at the data. Afterwards, I also explain briefly how you can use the Rest-Api and host a Python app for free at Heroku.

The Data

The root of all visualization is always the data. Thankfully, the Johns Hopkins University (JHU) offers raw data in csv format at their github account. So what is the first thing you do if you have csv files? You fire up a jupyter notebook (personally I use jupyter lab) and explore the data with pandas and some numpy.

So let’s get started and import these two wonderful libraries:

import pandas as pd

import numpy as np

Then we use the the raw urls from Github.

url_confirmed = 'https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_covid19_confirmed_global.csv'

url_death = 'https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_covid19_deaths_global.csv'

url_recovered = 'https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_covid19_recovered_global.csv'

These files are updated daily, so we get the latest version. In pandas you can directly paste the URL into the read_csv function and load the remote file into a dataframe.

df = pd.read_csv(url_confirmed)

With the head() we can get the first rows of the dataframe and see how the data is structured.

df.head()

| Province/State | Country/Region | Lat | Long | ... | 10/14/20 | 10/15/20 | 10/16/20 | 10/17/20 | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | NaN | Afghanistan | 33.93911 | 67.709953 | ... | 39994 | 40026 | 40073 | 40141 |

| 1 | NaN | Albania | 41.15330 | 20.168300 | ... | 15955 | 16212 | 16501 | 16774 |

| 2 | NaN | Algeria | 28.03390 | 1.659600 | ... | 53584 | 53777 | 53998 | 54203 |

| 3 | NaN | Andorra | 42.50630 | 1.521800 | ... | 3190 | 3190 | 3377 | 3377 |

| 4 | NaN | Angola | -11.20270 | 17.873900 | ... | 6846 | 7096 | 7222 | 7462 |

5 rows × 274 columns

So we have columns with the “Province/State”, “Country/Region”, the GPS coordinates and then the number of absolute confirmed cases. Each day is one column and JHU will add one column every day.

To get names of the confirmed cases columns we get all column names with df.columns and ignore the first four columns.

case_columns=df.columns[4:]

Now, lets plot the data of a single country.

First, we get the index of the wanted row and then select the values with the loc[index,[columns]] access.

A little plot in the end and we have our first simple visualization.

german_index = df.loc[df['Country/Region']=='Germany'].index[0]

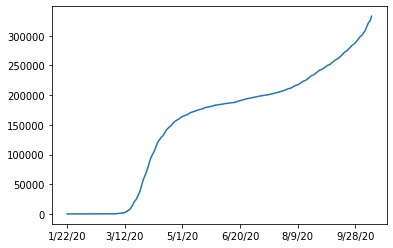

df.loc[german_index,case_columns].plot()

So we see that the data is cumulative and always increasing.

To get the new cases for every day we can just use diff() to subtract each succeeding columns.

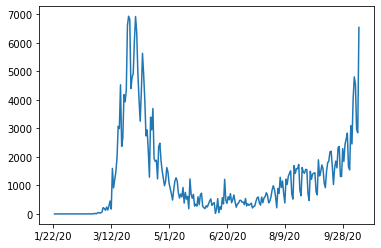

df.loc[german_index,case_columns].diff().plot()

Looks a bit noisy. If we would zoom in, we would see a pattern every seven days. The well known seasonality of a week. We can smoothen the plot by a rolling window (with the windows size of seven) and averaging (we use numpy mean here).

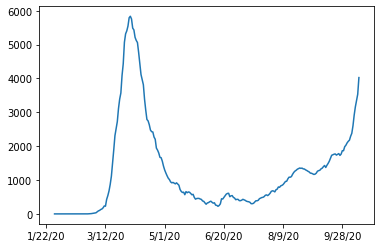

df.loc[german_index,case_columns].diff().rolling(window=7, axis=0).apply(np.mean).plot()

This looks much better now. Later in the dashboard, we make the window size and the average function interactive parameters.

After we know how the numbers can be plotted, we want to inspect the missing data.

If we drop all rows containing an nan and get the remaining unique countries, we will see the following:

df.dropna()["Country/Region"].unique()

array(['Australia', 'Canada', 'China', 'Denmark', 'France', 'Netherlands',

'United Kingdom'], dtype=object)

As all the nan values are located in the Province/State column, we cann also select the rows where this column is not nan and see the same result.

df[~pd.isna(df['Province/State'])]['Country/Region'].unique()

array(['Australia', 'Canada', 'China', 'Denmark', 'France', 'Netherlands',

'United Kingdom'], dtype=object)

A further inspection of this column reveals the following:

df['Province/State'].unique()

array([nan, 'Australian Capital Territory', 'New South Wales',

'Northern Territory', 'Queensland', 'South Australia', 'Tasmania',

'Victoria', 'Western Australia', 'Alberta', 'British Columbia',

'Diamond Princess', 'Grand Princess', 'Manitoba', 'New Brunswick',

'Newfoundland and Labrador', 'Northwest Territories',

'Nova Scotia', 'Ontario', 'Prince Edward Island', 'Quebec',

'Saskatchewan', 'Yukon', 'Anhui', 'Beijing', 'Chongqing', 'Fujian',

'Gansu', 'Guangdong', 'Guangxi', 'Guizhou', 'Hainan', 'Hebei',

'Heilongjiang', 'Henan', 'Hong Kong', 'Hubei', 'Hunan',

'Inner Mongolia', 'Jiangsu', 'Jiangxi', 'Jilin', 'Liaoning',

'Macau', 'Ningxia', 'Qinghai', 'Shaanxi', 'Shandong', 'Shanghai',

'Shanxi', 'Sichuan', 'Tianjin', 'Tibet', 'Xinjiang', 'Yunnan',

'Zhejiang', 'Faroe Islands', 'Greenland', 'French Guiana',

'French Polynesia', 'Guadeloupe', 'Martinique', 'Mayotte',

'New Caledonia', 'Reunion', 'Saint Barthelemy',

'Saint Pierre and Miquelon', 'St Martin', 'Aruba',

'Bonaire, Sint Eustatius and Saba', 'Curacao', 'Sint Maarten',

'Anguilla', 'Bermuda', 'British Virgin Islands', 'Cayman Islands',

'Channel Islands', 'Falkland Islands (Malvinas)', 'Gibraltar',

'Isle of Man', 'Montserrat', 'Turks and Caicos Islands'],

dtype=object)

A lot of islands and provinces of China:

df[df['Country/Region']=='China']

| Province/State | Country/Region | Lat | Long | ... | 10/14/20 | 10/15/20 | 10/16/20 | 10/17/20 | |

|---|---|---|---|---|---|---|---|---|---|

| 56 | Anhui | China | 31.8257 | 117.2264 | ... | 991 | 991 | 991 | 991 |

| 57 | Beijing | China | 40.1824 | 116.4142 | ... | 937 | 937 | 937 | 937 |

| 58 | Chongqing | China | 30.0572 | 107.8740 | ... | 585 | 586 | 586 | 586 |

| 59 | Fujian | China | 26.0789 | 117.9874 | ... | 416 | 417 | 417 | 417 |

| 60 | Gansu | China | 35.7518 | 104.2861 | ... | 170 | 170 | 170 | 170 |

| 61 | Guangdong | China | 23.3417 | 113.4244 | ... | 1873 | 1875 | 1877 | 1881 |

| 62 | Guangxi | China | 23.8298 | 108.7881 | ... | 260 | 260 | 260 | 260 |

| 63 | Guizhou | China | 26.8154 | 106.8748 | ... | 147 | 147 | 147 | 147 |

| 64 | Hainan | China | 19.1959 | 109.7453 | ... | 171 | 171 | 171 | 171 |

| 65 | Hebei | China | 39.5490 | 116.1306 | ... | 368 | 368 | 368 | 368 |

| 66 | Heilongjiang | China | 47.8620 | 127.7615 | ... | 948 | 948 | 948 | 948 |

| 67 | Henan | China | 37.8957 | 114.9042 | ... | 1281 | 1281 | 1281 | 1281 |

| 68 | Hong Kong | China | 22.3000 | 114.2000 | ... | 5201 | 5213 | 5220 | 5237 |

| 69 | Hubei | China | 30.9756 | 112.2707 | ... | 68139 | 68139 | 68139 | 68139 |

| 70 | Hunan | China | 27.6104 | 111.7088 | ... | 1019 | 1019 | 1019 | 1019 |

| 71 | Inner Mongolia | China | 44.0935 | 113.9448 | ... | 270 | 275 | 275 | 275 |

| 72 | Jiangsu | China | 32.9711 | 119.4550 | ... | 667 | 669 | 669 | 669 |

| 73 | Jiangxi | China | 27.6140 | 115.7221 | ... | 935 | 935 | 935 | 935 |

| 74 | Jilin | China | 43.6661 | 126.1923 | ... | 157 | 157 | 157 | 157 |

| 75 | Liaoning | China | 41.2956 | 122.6085 | ... | 280 | 280 | 280 | 280 |

| 76 | Macau | China | 22.1667 | 113.5500 | ... | 46 | 46 | 46 | 46 |

| 77 | Ningxia | China | 37.2692 | 106.1655 | ... | 75 | 75 | 75 | 75 |

| 78 | Qinghai | China | 35.7452 | 95.9956 | ... | 18 | 18 | 18 | 18 |

| 79 | Shaanxi | China | 35.1917 | 108.8701 | ... | 433 | 433 | 434 | 436 |

| 80 | Shandong | China | 36.3427 | 118.1498 | ... | 845 | 845 | 845 | 845 |

| 81 | Shanghai | China | 31.2020 | 121.4491 | ... | 1064 | 1075 | 1080 | 1085 |

| 82 | Shanxi | China | 37.5777 | 112.2922 | ... | 208 | 208 | 208 | 208 |

| 83 | Sichuan | China | 30.6171 | 102.7103 | ... | 723 | 723 | 724 | 725 |

| 84 | Tianjin | China | 39.3054 | 117.3230 | ... | 245 | 247 | 251 | 252 |

| 85 | Tibet | China | 31.6927 | 88.0924 | ... | 1 | 1 | 1 | 1 |

| 86 | Xinjiang | China | 41.1129 | 85.2401 | ... | 902 | 902 | 902 | 902 |

| 87 | Yunnan | China | 24.9740 | 101.4870 | ... | 211 | 211 | 211 | 211 |

| 88 | Zhejiang | China | 29.1832 | 120.0934 | ... | 1283 | 1283 | 1283 | 1283 |

33 rows × 274 columns

| Province/State | Country/Region | Lat | Long | |

|---|---|---|---|---|

| 69 | Hubei | China | 30.9756 | 112.2707 |

As the listing of states and provinces is very arbitrary (maybe in the beginning of the pandemic

a more detailed view on China was useful), I decided for a compromise.

I won’t display any regions just one number for one country.

For displaying this on the world map, I decided to use the coordinates of the province/state with the most cases.

For Example, for China this will be Hubei, for France it will be mainland France, as all islands and oversea territories have less cases.

Therefore, we group by ‘Country/Region’ and then select the row where the maximum was recorded on the last day (case_columns[-1]):

idx = df.groupby('Country/Region')[case_columns[-1]].transform(max) == df[case_columns[-1]]

We get one row index per country which we use to generate a dataframe with the first for columns (province, country name, gps coordinates):

coord_df = df.loc[idx,df.columns[0:4]]

To validate our operation we take a look at China and see as expected, that Hubei was chosen as epicentre of the pandemic in China:

coord_df[coord_df['Country/Region']=='China']

| Province/State | Country/Region | Lat | Long | |

|---|---|---|---|---|

| 69 | Hubei | China | 30.9756 | 112.2707 |

For the case number, we use the sum over all provinces/states, again using groupby:

df = df.groupby('Country/Region')[case_columns].agg(sum)

df

| 1/22/20 | 1/23/20 | 1/24/20 | 1/25/20 | ... | 10/14/20 | 10/15/20 | 10/16/20 | 10/17/20 | |

|---|---|---|---|---|---|---|---|---|---|

| Country/Region | |||||||||

| Afghanistan | 0 | 0 | 0 | 0 | ... | 39994 | 40026 | 40073 | 40141 |

| Albania | 0 | 0 | 0 | 0 | ... | 15955 | 16212 | 16501 | 16774 |

| Algeria | 0 | 0 | 0 | 0 | ... | 53584 | 53777 | 53998 | 54203 |

| Andorra | 0 | 0 | 0 | 0 | ... | 3190 | 3190 | 3377 | 3377 |

| Angola | 0 | 0 | 0 | 0 | ... | 6846 | 7096 | 7222 | 7462 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| West Bank and Gaza | 0 | 0 | 0 | 0 | ... | 45658 | 46100 | 46434 | 46746 |

| Western Sahara | 0 | 0 | 0 | 0 | ... | 10 | 10 | 10 | 10 |

| Yemen | 0 | 0 | 0 | 0 | ... | 2053 | 2053 | 2055 | 2055 |

| Zambia | 0 | 0 | 0 | 0 | ... | 15616 | 15659 | 15659 | 15789 |

| Zimbabwe | 0 | 0 | 0 | 0 | ... | 8055 | 8075 | 8099 | 8110 |

189 rows × 270 columns

To calculate the relative number of cases per persons in a country we need the population numbers.

The UN provides some CSV data for download.

Again, to transform the data to a format we can use, we have to do some processing.

The procedure can be found in a separate page/notebook.

To prevent “Unnamed” columns we use index_col=0.

This results in a data frame with the country name as index.

df_population = pd.read_csv('data/population.csv',index_col=0)

df_population

| Country/Region | Population | |

|---|---|---|

| Italy | Italy | 60421760 |

| Portugal | Portugal | 10283822 |

| World | World | 7594270356 |

| Rwanda | Rwanda | 12301939 |

| Bulgaria | Bulgaria | 7025037 |

| ... | ... | ... |

| Diamond Princess | Diamond Princess | 3600 |

| Holy See | Holy See | 825 |

| Taiwan* | Taiwan* | 23780000 |

| Western Sahara | Western Sahara | 595060 |

| MS Zaandam | MS Zaandam | 1829 |

268 rows × 2 columns

As the countries are the same now we can merge on the index:

df_w_pop = df.merge(df_population, left_index=True,right_index=True)

df_w_pop

| 1/22/20 | 1/23/20 | 1/24/20 | 1/25/20 | ... | 10/16/20 | 10/17/20 | Country/Region | Population | |

|---|---|---|---|---|---|---|---|---|---|

| Afghanistan | 0 | 0 | 0 | 0 | ... | 40073 | 40141 | Afghanistan | 37172386 |

| Albania | 0 | 0 | 0 | 0 | ... | 16501 | 16774 | Albania | 2866376 |

| Algeria | 0 | 0 | 0 | 0 | ... | 53998 | 54203 | Algeria | 42228429 |

| Andorra | 0 | 0 | 0 | 0 | ... | 3377 | 3377 | Andorra | 77006 |

| Angola | 0 | 0 | 0 | 0 | ... | 7222 | 7462 | Angola | 30809762 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| West Bank and Gaza | 0 | 0 | 0 | 0 | ... | 46434 | 46746 | West Bank and Gaza | 4569087 |

| Western Sahara | 0 | 0 | 0 | 0 | ... | 10 | 10 | Western Sahara | 595060 |

| Yemen | 0 | 0 | 0 | 0 | ... | 2055 | 2055 | Yemen | 28498687 |

| Zambia | 0 | 0 | 0 | 0 | ... | 15659 | 15789 | Zambia | 17351822 |

| Zimbabwe | 0 | 0 | 0 | 0 | ... | 8099 | 8110 | Zimbabwe | 14439018 |

189 rows × 272 columns

Validate with Germany:

pop_germany = df_w_pop.loc['Germany',['Population']]

pop_germany

Population 82905782

Name: Germany, dtype: object

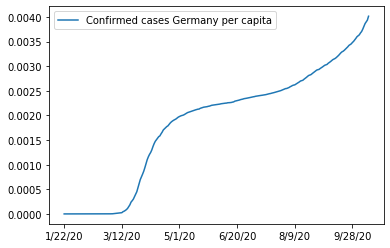

Round about 83 millions sounds right. With this number we can now plot the cases divided by population (see the changed y axis):

ax = df_w_pop.loc['Germany',case_columns].apply(lambda x: x/pop_germany).plot()

ax.legend(["Confirmed cases Germany per capita"])

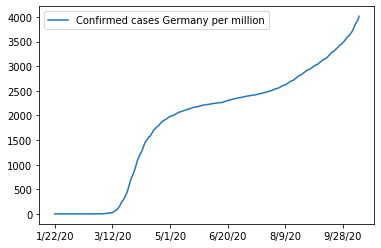

And the cases per one million inhabitants:

ax = df_w_pop.loc['Germany',case_columns].apply(lambda x: x/(pop_germany/1e6)).plot()

ax.legend(["Confirmed cases Germany per million"])

This concludes the inspection and pre-procession of the raw data and we can jump into the dashboard creation.

Doing the Bokeh

Bokeh enables you to create interactive visualization in your browser. You can create plots, tables, and other widgets to control appearance of the plots. In the case of our dashboard, we use two plots on the left (one line plot for the cases, and plotting circles in a world map). Further, we use some tables, buttons, sliders, multi-select lists etc.

These elements are arranged as follows:

In Python code, we use the layout function, which takes a list of further layout elements.

Specifically, we use column and row elements.

The first row consists of three columns, where “column 0” contains the two plots.

“Column 1” consists of two tables with html headings and the last columns has all the buttons and widgets for control.

The bottom row’s only element is a footer text line:

In Python code, we use the layout function, which takes a list of further layout elements.

Specifically, we use column and row elements.

The first row consists of three columns, where “column 0” contains the two plots.

“Column 1” consists of two tables with html headings and the last columns has all the buttons and widgets for control.

The bottom row’s only element is a footer text line:

self.layout = layout([

row(column(tab_plot, world_map),

column(top_top_14_new_header, top_top_14_new, top_top_14_cum_header, top_top_14_cum),

column(refresh_button, radio_button_group_df, radio_button_group_per_capita, plots_button_group,

radio_button_group_scale, slider, radio_button_average,

multi_select),

),

row(footer)

])

This footer for example is a simple Div element with HTML inside:

footer = Div(

text="""Covid-19 Dashboard created by Andreas Weichslgartner in April 2020 with python, bokeh, pandas,

numpy, pyproj, and colorcet. Source Code can be found at

<a href="https://github.com/weichslgartner/covid_dashboard/">Github</a>.""",

width=1600, height=10, align='center')

The buttons and selections widgets on the right also quite easy to implement. Just give a list with labels and a parameter which signals what button is active. Then add a callback function which will be triggered on clicking on the buttons.

radio_button_group_per_capita = RadioButtonGroup(

labels=["Total Cases", "Cases per Million"], active=0 if not self.active_per_capita else 1)

radio_button_group_per_capita.on_click(self.update_capita)

The onclick callback function as one argument, the new value of the active button.

In the case of the per_capita button, it is 0 for total numbers and 1 if the per_capita option is activated.

As you see in the function, the current active status is kept in class member variables.

First, I used global variables (which is fine for small bokeh plots), but gets rather ugly for more states.

So, I decided to create a class Dashboard and encapsulate all the state variables as class members.

Coming back to the callback function, once we saved the state, we update the table data (self.generate_table_new()and self.generate_table_cumulative()).

Finally, we update the line plot in the upper left with the self.update_data function.

def update_capita(self, new):

"""

callback to change between total and per capita numbers

:param new: 0 if total, 1 if per capita

:return:

"""

if new == 0:

self.active_per_capita = False # 'total'

else:

self.active_per_capita = True # 'per_capita'

self.generate_table_new()

self.generate_table_cumulative()

self.update_data('', self.country_list, self.country_list)

The second kind of callbacks are onchange functions, like in the case of the multiselect widget:

multi_select = MultiSelect(title="Option (Multiselect Ctrl+Click):", value=self.country_list,

options=countries, height=500)

multi_select.on_change('value', self.update_data)

Here, the value is a list with the active countries and the callback function has a attribute value, the old value of the list and the new:

def update_data(self, attr, old, new):

"""

repaints the plots with an updated country list

:param attr:

:param old:

:param new:

:return:

"""

_ = (attr, old)

self.country_list = new

self.source.data = self.get_dict_from_df(self.active_df, self.country_list, self.active_prefix.name)

self.layout.set_select(dict(name=TAB_PANE), dict(tabs=self.generate_plot(self.source).tabs))

However, we only use the new value (currently active countries) and discard the other two parameters.

Afterwards, we update the data source of the plot and redraw the complete plot.

The data source is defined as ColumnDataSource(data=new_dict).

In this dict each key is one plot line type and the values of this keys are the data point.

For example, germany_confirmed_daily_raw represents the confirmed cases on a daily basis without average.

Based on the active member variables these key values entries are generates by the self.get_dict_from_df function.

Normally, this would be enough to update the plot but we might also influence the axis, scaling etc, that’s why just replace the old plot with a new one.

To do this we use layout.set_select which searches for the element with the given name “TAB_PANE” and then replaces it with a new tabs element.

The tabs element in our case is the line plot in the upper left with the two tabs daily and cumulative.

You could also select the layout element by iterating through some children layout elements, e.g. layout.children[0].children[0].

I did this in the beginning, but this is rather ugly and once you update your layout you manually have to update to correct child.

Thankfully I discovered set_select and can now search by a unique name of the element.

To fill the dictionaries of the data source we use the following function:

def get_dict_from_df(self, df: pd.DataFrame, country_list: List[str], prefix: str):

"""

returns the needed data in a dict

:param df: dataframe to fetch the data

:param country_list: list of countries for which the data should be fetched

:param prefix: which data should be fetched, confirmed, deaths or recovered (refers to the dataframe)

:return: dict with for keys

"""

new_dict = {}

for country in country_list:

absolute_raw, absolute_rolling, absolute_trend, delta_raw, delta_rolling, delta_trend = \

self.get_lines(df, country, self.active_window_size)

country = replace_special_chars(country)

new_dict[f"{country}_{prefix}_{TOTAL_SUFF}_{PlotType.raw.name}"] = absolute_raw

new_dict[f"{country}_{prefix}_{TOTAL_SUFF}_{PlotType.average.name}"] = absolute_rolling

new_dict[f"{country}_{prefix}_{TOTAL_SUFF}_{PlotType.trend.name}"] = absolute_trend

new_dict[f"{country}_{prefix}_{DELTA_SUFF}_{PlotType.raw.name}"] = delta_raw

new_dict[f"{country}_{prefix}_{DELTA_SUFF}_{PlotType.average.name}"] = delta_rolling

new_dict[f"{country}_{prefix}_{DELTA_SUFF}_{PlotType.trend.name}"] = delta_trend

new_dict['x'] = x_date # list(range(0, len(delta_raw)))

return new_dict

We iterate over all selected countries and get the needed data from the global pandas dataframes.

In the key, we encode the country, the kind of data (confirmed, deaths, recovered), cumulative or daily and they kind data processing (raw, rolling average, and trend).

We replace special characters and whitespaces in the country with number character.

This is a bit of a hack, as the tooltip function only works with alphanumeric characters and there are no countries with numbers in the name.

The x_date is also a global list with the dates for the x-axis, generated as follows:

x_date = [pd.to_datetime(case_columns[0]) + timedelta(days=x) for x in range(0, len(case_columns))]

The return value of get_dict_from_df is the base of the central plotting function generate_plot.

It decodes back the information from the dict keys, generates the correct y-axis, and plots the lines specified by the

current class state.

def generate_plot(self, source: ColumnDataSource):

"""

do the plotting based on interactive elements

:param source: data source with the selected countries and the selected kind of data (confirmed, deaths, or

recovered)

:return: the plot layout in a tab

"""

# global active_y_axis_type, active_tab

keys = source.data.keys()

if len(keys) == 0:

return self.get_tab_pane()

infected_numbers_new = []

infected_numbers_absolute = []

for k in keys:

if f"{DELTA_SUFF}_{PlotType.raw.name}" in k:

infected_numbers_new.append(max(source.data[k]))

elif f"{TOTAL_SUFF}_{PlotType.raw.name}" in k:

infected_numbers_absolute.append(max(source.data[k]))

y_range = self.calculate_y_axis_range(infected_numbers_new)

p_new = figure(title=f"{self.active_prefix.name} (new)", plot_height=400, plot_width=WIDTH, y_range=y_range,

background_fill_color=BACKGROUND_COLOR, y_axis_type=self.active_y_axis_type.name)

y_range = self.calculate_y_axis_range(infected_numbers_absolute)

p_absolute = figure(title=f"{self.active_prefix.name} (absolute)", plot_height=400, plot_width=WIDTH,

y_range=y_range,

background_fill_color=BACKGROUND_COLOR, y_axis_type=self.active_y_axis_type.name)

selected_keys_absolute = []

selected_keys_new = []

for vals in source.data.keys():

if vals == 'x' in vals:

selected_keys_absolute.append(vals)

selected_keys_new.append(vals)

continue

tokenz = vals.split('_')

name = f"{revert_special_chars_replacement(tokenz[0])} ({tokenz[-1]})"

color = color_dict[tokenz[0]]

plt_type = PlotType[tokenz[-1]]

if (plt_type == PlotType.raw and self.active_plot_raw) or \

(plt_type == PlotType.average and self.active_plot_average) or \

(plt_type == PlotType.trend and self.active_plot_trend):

if TOTAL_SUFF in vals:

selected_keys_absolute.append(vals)

p_absolute.line('x', vals, source=source, line_dash=line_dict[plt_type].line_dash, color=color,

alpha=line_dict[plt_type].alpha, line_width=line_dict[plt_type].line_width,

line_cap='butt', legend_label=name)

else:

selected_keys_new.append(vals)

p_new.line('x', vals, source=source, line_dash=line_dict[plt_type].line_dash, color=color,

alpha=line_dict[plt_type].alpha, line_width=line_dict[plt_type].line_width,

line_cap='round', legend_label=name)

self.add_figure_attributes(p_absolute, selected_keys_absolute)

self.add_figure_attributes(p_new, selected_keys_new)

tab1 = Panel(child=p_new, title=f"{self.active_prefix.name} (daily)")

tab2 = Panel(child=p_absolute, title=f"{self.active_prefix.name} (cumulative)")

tabs = Tabs(tabs=[tab1, tab2], name=TAB_PANE)

if self.layout is not None:

tabs.active = self.get_tab_pane().active

return tabs

For the line colors we use a global dict with a country/color relationship. The color scheme is taken from the package colorcet (I uses a dark scheme for best contrast against a grey background).

import colorcet as cc

color_dict = dict(zip(unique_countries_wo_special_chars,

cc.b_glasbey_bw_minc_20_maxl_70[:len(unique_countries_wo_special_chars)]

)

)

Also the hover tooltip is generated from data source dict keys:

def generate_tool_tips(selected_keys) -> HoverTool:

"""

string magic for the tool tips

:param selected_keys:

:return:

"""

tooltips = [(f"{revert_special_chars_replacement(x.split('_')[0])} ({x.split('_')[-1]})",

f"@{x}{{(0,0)}}") if x != 'x' else ('Date', '$x{\%F}') for x in selected_keys]

hover = HoverTool(tooltips=tooltips,

formatters={'$x': 'datetime'}

)

return hover

The second plot, the world map, is generate with the following function:

def create_world_map(self):

"""

draws the fancy world map and do some projection magic

:return:

"""

tile_provider = get_provider(Vendors.CARTODBPOSITRON_RETINA)

tool_tips = [

("(x,y)", "($x, $y)"),

("country", "@country"),

("number", "@num{(0,0)}")

]

world_map = figure(width=WIDTH, height=400, x_range=(-BOUND, BOUND), y_range=(-10_000_000, 12_000_000),

x_axis_type="mercator", y_axis_type="mercator", tooltips=tool_tips)

# world_map.axis.visible = False

world_map.add_tile(tile_provider)

self.world_circle_source = ColumnDataSource(

dict(x=x_coord, y=y_coord, num=self.active_df['total'],

sizes=self.active_df['total'].apply(lambda d: ceil(log(d) * 4) if d > 1 else 1),

country=self.active_df[ColumnNames.country.value]))

world_map.circle(x='x', y='y', size='sizes', source=self.world_circle_source, fill_color="red", fill_alpha=0.8)

return world_map

The tile provider determines the style of the map (an overview of the styles can be found here).

Again, the data is stored in a ColumnDataSource and a dict.

The circles are a logarithmic representations of the numbers in the selected global dataframe.

One thing we have to take care of is the projection of the coordinates.

The John Hopkins data gives the coordinates in the World Geodetic System notation (a 3D representation with longitude and latitude as used in GPS).

In contrast, for the 2D plot we need a projection of the 3D coordinated to a 2D space.

The most used projection is the Mercator projection.

We can do the transformation with the pyproj package.

from pyproj import Transformer

# Transform lat and long (World Geodetic System, GPS, EPSG:4326) to x and y (Pseudo-Mercator, "epsg:3857")

transformer = Transformer.from_crs("epsg:4326", "epsg:3857")

x_coord, y_coord = transformer.transform(df_coord[ColumnNames.lat.value].values,

df_coord[ColumnNames.long.value].values)

Adding a Rest-Api

We finished the layout of our dashboard, but each time we start it will have the same countries and plot types selected. If we want to share a specific plot a Rest-Api would be neat. For example, something like the following request:

https://covid-19-bokeh-dashboard.herokuapp.com/dashboard?country=Germany&country=Finland&per_capita=True&plot_raw=False

After the URL, we append a ? and then key/value pairs concatenated with =.

Note here, that we can have have multiple values with the same key (important for the country selection).

But how do we get these key/value pairs into our dashboard? Fortunately, bokeh runs on a Tornardo webserver which has the needed functionality. We can access them as follows:

args = curdoc().session_context.request.arguments

The keys in this àrgs dict are already strings, but the values are still encoded as byte strings and we need to encode them back with the to_basestring function from Tornardo.

The overall parsing function looks like this:

def parse_arguments(arguments: dict):

"""

parse get arguments of rest api

:param arguments: as the dict given from tornardo

:return:

"""

arguments = {k.lower(): v for k, v in arguments.items()}

country_list = ['Germany']

if 'country' in arguments:

country_list = [countries_lower_dict[to_basestring(c).lower()] for c in arguments['country'] if

to_basestring(c).lower() in countries_lower_dict.keys()]

if len(country_list) == 0:

country_list = ['Germany']

active_per_capita = parse_bool(arguments, 'per_capita', False)

active_window_size = parse_int(arguments, 'window_size', 7)

active_plot_raw = parse_bool(arguments, 'plot_raw')

active_plot_average = parse_bool(arguments, 'plot_average')

active_plot_trend = parse_bool(arguments, 'plot_trend')

active_average = Average.median if 'average' in arguments and to_basestring(

arguments['average'][0]).lower() == 'median' else Average.mean

active_y_axis_type = Scale.log if 'scale' in arguments and to_basestring(

arguments['scale'][0]).lower() == Scale.log.name else Scale.linear

active_prefix = Prefix.confirmed

if 'data' in arguments:

val = to_basestring(arguments['data'][0]).lower()

if val in Prefix.deaths.name:

active_prefix = Prefix.deaths

elif val in Prefix.recovered.name:

active_prefix = Prefix.recovered

return country_list, active_per_capita, active_window_size, active_plot_raw, active_plot_average, \

active_plot_trend, active_average, active_y_axis_type, active_prefix

The results are the used to construct the dashboard:

country_list_, active_per_capita_, active_window_size_, active_plot_raw_, active_plot_average_, \

active_plot_trend_, active_average_, active_y_axis_type_, active_prefix_ = parse_arguments(args)

dash = Dashboard(country_list=country_list_,

active_per_capita=active_per_capita_,

active_window_size=active_window_size_,

active_plot_raw=active_plot_raw_,

active_plot_average=active_plot_average_,

active_plot_trend=active_plot_trend_,

active_y_axis_type=active_y_axis_type_,

active_prefix=active_prefix_)

The last thing we need to do is to call the layout function:

dash.do_layout()

Now we can start up the dashboard in our console:

bokeh serve dashboar.py

And see the result at http://localhost:5006/dashboard.

Hosting the Dashboard at Heroku

It nice to check the dashboard on our local machine, but hosting the whole thing on the internet would be great.

After some search I found Heroku, where you can host Python apps for free.

The easiest thing is to connect your Github repo with Heroku and with each push you deploy the app.

Further, you need a requirements.txt with your Python dependencies.

You can generate this file with:

pip freeze > requirements.txt

I personally use Anaconda as a package manager and at the first try my requirements.txt did not work out of the box with Heroku (they use virtual env and pip).

In the end, I created a new local conda environment and install the requirements.txt through pip to test if everything works.

Further you need to add a Procfile to your repository.

In the dashboard case, it has the following content:

`

web: bokeh serve –port=$PORT –address=0.0.0.0 –allow-websocket-origin=covid-19-bokeh-dashboard.herokuapp.com –use-xheaders dashboard.py

`

One final thing to notice, the Heroku app will be shutdown after some time and has to restart at the next request. This can take some time. So you can regularly access your app to prevent this.

Conclusion

Bokeh is a really great library to create interactive plots with all the widgets you could imagine. And all this without getting your hands dirty with javascript. It is also very good for visualizing streaming data on the fly, e.g., for monitoring systems etc. It will stay my tool of choice for these kind of tasks. For other interactive plotting I also like the wonderful Altair package. You can find the complete source code of the dashboard, notebooks, and config files at my Github and the deployed version at Heroku.